I was using a compressor (DynamicRangeController) to condition the input to a distortion (InputOutputCharacteristic). I also had a wet/dry mix using a crossfader. But when crossfading between the wet and dry signals, I could hear an appreciable delay of about 100-200 ms in the wet signal. The compressor is what causes the delay, and the delay stays the same regardless of the Attack and Release time settings.
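A delay that doesn't change with Attack and Release is consistent with a compressor that uses a lookahead buffer, although I haven't confirmed that DynamicRangeController works this way. The Python sketch below is a generic feed-forward compressor, not Kyma's implementation: the gain is computed from the undelayed input but applied to a delayed copy, so the output latency equals the (hypothetical) `lookahead_ms` parameter no matter what the attack and release times are.

```python
import numpy as np

def lookahead_compressor(x, sr, threshold_db=-20.0, ratio=4.0,
                         attack_ms=5.0, release_ms=50.0, lookahead_ms=140.0):
    """Generic feed-forward compressor with a lookahead delay line.

    Hypothetical sketch, not Kyma's DynamicRangeController. The gain is
    computed from the undelayed input and applied to a copy of the input
    delayed by lookahead_ms, so the output always lags by lookahead_ms.
    """
    n_look = int(round(lookahead_ms * 1e-3 * sr))
    # One-pole smoothing coefficients for the gain (in dB).
    a_att = np.exp(-1.0 / (attack_ms * 1e-3 * sr))
    a_rel = np.exp(-1.0 / (release_ms * 1e-3 * sr))
    # Signal path: the input delayed by n_look samples, same length as x.
    delayed = np.concatenate([np.zeros(n_look), x])[:len(x)]
    y = np.zeros_like(x, dtype=float)
    gain_db = 0.0
    for n, s in enumerate(x):
        # Side chain: instantaneous peak level of the *undelayed* input.
        level_db = 20.0 * np.log10(max(abs(s), 1e-9))
        over = level_db - threshold_db
        target_db = -over * (1.0 - 1.0 / ratio) if over > 0 else 0.0
        # Attack when the gain must drop, release when it recovers.
        coeff = a_att if target_db < gain_db else a_rel
        gain_db = coeff * gain_db + (1.0 - coeff) * target_db
        y[n] = delayed[n] * 10.0 ** (gain_db / 20.0)
    return y
```

With `sr=1000` and `lookahead_ms=140.0`, an impulse at sample 0 first appears at the output at sample 140 for every attack/release pair; only the lookahead length sets the delay.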

Here's a recording demonstrating the delay: http://kyma.symbolicsound.com/qa/?qa=blob&qa_blobid=5713368294979155934

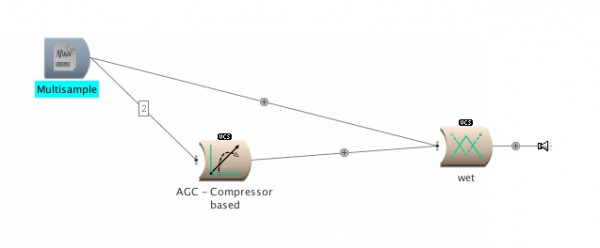

This is the Sound diagram of my simplified Sound just going through the compressor:

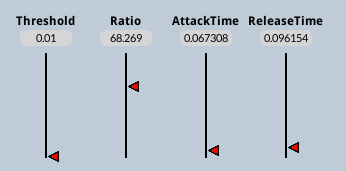

And my parameter settings:

Is there any way to avoid this delay?
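If the wet path's latency is fixed and known, one workaround (an untested assumption on my part, sketched generically in Python rather than as a Kyma Sound) is to delay the dry path by the same amount before the crossfade, so the two signals stay time-aligned. This removes the audible offset while crossfading, but the whole output is still late by that amount. `delay`, `crossfade`, and `latency_samples` are hypothetical names.

```python
import numpy as np

def delay(x, n):
    """Delay signal x by n samples, keeping its length."""
    return np.concatenate([np.zeros(n), x])[:len(x)]

def crossfade(dry, wet, mix, latency_samples=0):
    """Linear wet/dry mix: mix=0 is all dry, mix=1 is all wet.

    latency_samples (hypothetical) delays the dry path so it lines up
    with a wet path that has a known, fixed latency.
    """
    dry_aligned = delay(dry, latency_samples)
    return (1.0 - mix) * dry_aligned + mix * wet
```

Without compensation, a 50% mix of an impulse and its 140-sample-delayed copy gives two half-height clicks; with `latency_samples=140` they coincide as a single full-height impulse.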

Maybe I should ask this question differently. What I'm trying to do is implement an automatic gain control on the input to a distortion effect, so that quiet signals get boosted and then clipped just as loud signals do. I thought a compressor would be a way to do that, but if it has an inherent delay of about 140 ms, is there a better approach?
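For the automatic gain control itself, one zero-latency alternative is an envelope follower that sets the gain directly, with no delay line in the signal path. This is a generic Python sketch under my own assumptions (all names and default values are hypothetical, not Kyma parameters): a peak envelope follower tracks the input level, the gain that would bring that envelope to `target` is applied to the current sample and capped at `max_gain`, and a hard clipper follows.

```python
import numpy as np

def agc_then_clip(x, sr, target=0.5, max_gain=100.0,
                  attack_ms=5.0, release_ms=200.0, clip_level=0.3):
    """Zero-latency automatic gain control followed by a hard clipper.

    Hypothetical sketch. The gain is applied to the current sample (no
    delay line), and max_gain keeps silence and noise from being boosted
    without limit.
    """
    a_att = np.exp(-1.0 / (attack_ms * 1e-3 * sr))
    a_rel = np.exp(-1.0 / (release_ms * 1e-3 * sr))
    env = 0.0
    y = np.empty(len(x))
    for n, s in enumerate(x):
        level = abs(s)
        # Peak envelope follower: fast rise, slow fall.
        coeff = a_att if level > env else a_rel
        env = coeff * env + (1.0 - coeff) * level
        gain = min(target / max(env, 1e-9), max_gain)
        y[n] = gain * s
    # Hard clip: quiet and loud inputs both end up hitting clip_level.
    return np.clip(y, -clip_level, clip_level)
```

The trade-off is that without lookahead the gain lags loud onsets, so the first few milliseconds of a transient can overshoot; here the clipper absorbs that overshoot, which may be acceptable in front of a distortion anyway.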