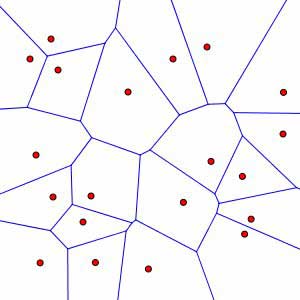

Hi, I'm interested in exploring a possible approach to sound morphing in which the output of an external machine-learning algorithm (a Kohonen self-organizing map) resembles the attached diagram: each digital audio file in the input set ends up as one of the red dots. The neighbors of any selected sound/dot are more likely to be similar to it, according to the feature metrics chosen to analyze the set of sounds.

I've encountered a 2D morphing example in Kyma in which four sounds are placed at the four corners of a square, so that a real-time morph among all four sounds can be controlled via an XY pad or any pair of controllers.
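As I understand it, the four-corner case amounts to bilinear weighting of the corner sounds. Here is a rough sketch in Python (not Kyma code; the function name and corner ordering are my own assumptions, and I don't know how Kyma implements this internally):

```python
def bilinear_weights(x, y):
    """Weights for the four corners of a unit square at pad position (x, y).

    Corner order (an arbitrary choice for this sketch):
    bottom-left, bottom-right, top-left, top-right.
    """
    # Clamp the pad position to the unit square.
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)
    return (
        (1.0 - x) * (1.0 - y),  # bottom-left
        x * (1.0 - y),          # bottom-right
        (1.0 - x) * y,          # top-left
        x * y,                  # top-right
    )
```

The four weights always sum to 1.0, and at a corner the matching sound gets the full weight.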

My question is:

Using Kyma, in order for a morphing process to accommodate an input set of sounds whose 2D similarity representation can be viewed as a Voronoi diagram like the one shown...

(a) would it make the most sense to choose a quadrilateral of the four closest points to any point (where one of the points is the identified/selected point), and then treat those four points as the four corners of the regular 2D morphing square (with or without a 2D transform to un-skew the particular four-sided shape into a square)? The quadrilateral would need to be selected separately, via a tablet for example.

(b) or is there (or might there be) a way to morph between more than four sounds at once, provided a weight value is supplied for each sound, with all weights adding up to 1.0?

(c) what else am I missing? Any comments/suggestions are eagerly welcomed.
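To make the weights in (b) concrete, here is a rough Python sketch (again, not Kyma code) of one way they might be computed from a cursor position on the map. Inverse-distance weighting over the `k` nearest points is purely my assumption; `morph_weights`, `k`, and `power` are hypothetical names, and other schemes may well suit morphing better.

```python
import math

def morph_weights(cursor, points, k=4, power=2.0):
    """Map index -> weight for the k points nearest to cursor.

    Weights are proportional to 1 / distance**power and are
    normalized so that they sum to 1.0.
    """
    # Distance from the cursor to every point, nearest first.
    nearest = sorted((math.dist(cursor, p), i) for i, p in enumerate(points))[:k]
    # If the cursor sits exactly on a point, that sound gets all the weight.
    for d, i in nearest:
        if d == 0.0:
            return {i: 1.0}
    raw = {i: 1.0 / d ** power for d, i in nearest}
    total = sum(raw.values())
    return {i: w / total for i, w in raw.items()}
```

With `k=4` this covers the quadrilateral idea in (a) without needing to un-skew anything, and a larger `k` gives the more-than-four case in (b).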

Thanks,

Thom